Model benchmarks of tools often tend to optimize for the wrong outcome like ranking Claude models by peak performance on curated tests, which creates a predictable failure mode. A case in point is when developers default to Opus for routine work that never approaches its reasoning ceiling, then hit usage limits and lose access entirely.

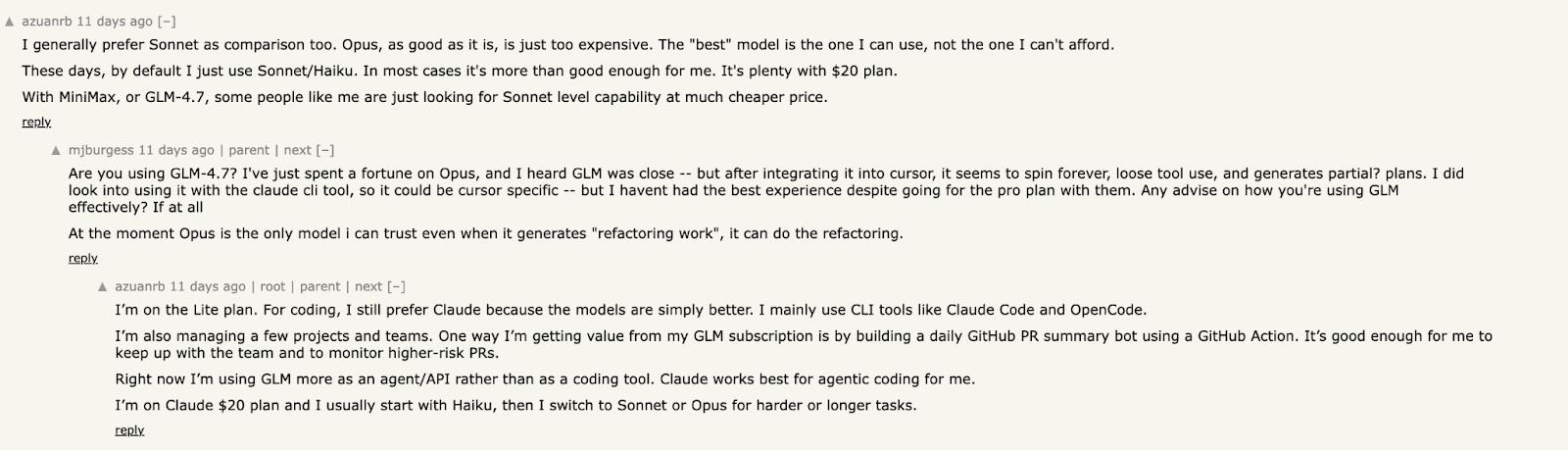

A developer described what this looks like in practice. "I'm on Claude $20 plan and I usually start with Haiku, then I switch to Sonnet or Opus for harder or longer tasks." Someone asked why they don't use Opus more, since it can actually cost less per successful task.

The constraint that mattered wasn't efficiency. "If I use Opus and it's not as efficient for my current tasks, I'll burn through my limit pretty fast. Ended up to where I couldn’t use it any anymore for the next few hours." They measure cost per task, not per token. Opus sometimes proves cheaper by completing refactoring correctly on the first attempt. But availability trumps efficiency. "Whereas with Sonnet/Haiku, I'm much more guaranteed to have 100% AI assistance throughout my coding session. This matters more to me right now."

Their summary. "The 'best' model is the one I can use, not the one I can't afford."

Another developer had the opposite constraint. They'd spent heavily on Opus because "at the moment Opus is the only model I can trust even when it generates 'refactoring work', it can do the refactoring."

The benchmark-driven mental model breaks here. It assumes the highest-ranked model is the best choice, which holds only if task difficulty consistently approaches the capability ceiling. For work where most tasks don't require frontier reasoning, optimizing for peak capability guarantees you'll hit usage limits on routine work. For work where failed attempts are expensive, optimizing for cost guarantees you'll waste time debugging.

Overall, benchmarks measure what's rankable. They don't measure the distribution of tasks in your actual workflow, or which constraint binds first under real usage patterns. That gap explains why two developers analyzing the same models reach opposite conclusions.

This article examines where each Claude model's capabilities justify their costs.

Claude Opus vs. Sonnet vs. Haiku: Which Model Should You Use for Coding?

The model selection for coding depends on where complexity lives in your task. Opus handles architectural reasoning and multi-file refactoring where context windows matter. Sonnet balances depth of reasoning with response speed in most production workflows. Haiku completes patterns quickly when the solution space is well-defined and the local context is sufficient.

Here are the biggest differences between the Claude models:

Sources: Anthropic Pricing, Microsoft Azure, Anthropic Haiku 4.5

SWE-bench Verified

SWE-bench Verified measures how well models solve real bugs from open-source GitHub repositories. It's not a synthetic test, these are actual issues that human engineers fixed, and the model needs to generate the correct patch.

Opus 4.5 scores 80.9%, meaning it successfully resolves about 8 out of 10 real bugs. Sonnet 4.5 achieves 77.2% in standard mode, jumping to 82% when running multiple attempts in parallel. Haiku 4.5 reaches 73.3%. The 7.6 percentage point gap between Opus and Haiku represents the tradeoff between speed and success rate. For most debugging tasks, that gap matters less than whether the model understands your specific codebase context.

Input pricing

Input pricing measures the cost per million tokens of code or text you send to the model. This includes your prompts, the code files you want analyzed, and any context you provide.

Opus 4.5 costs $5 per million input tokens. Sonnet 4.5 runs $3 per million. Haiku 4.5 charges $1 per million. When you're sending large codebases for analysis or providing extensive documentation, input costs can exceed output costs. Haiku's 5x cost advantage in input makes it economical for high-volume scenarios where you repeatedly analyze the same codebase.

Output pricing

Output pricing measures the cost per million tokens the model generates. This is the code it writes, the explanations it provides, and any reasoning it shows.

Opus 4.5 costs $25 per million output tokens. Sonnet 4.5 runs $15 per million. Haiku 4.5 charges $5 per million. Output costs typically exceed input costs because generated code is usually longer than the prompts that request it. The 5x price difference between Opus and Haiku compounds when you're generating complete implementations rather than small fixes.

Context window

Context window defines how much information the model can process in a single request. This includes your prompt, relevant code files, documentation, and conversation history.

All three models support 200,000 tokens (roughly 150,000 words or about 600 pages of code). Sonnet 4.5 offers an experimental 1-million-token window for analyzing entire large codebases or processing massive documentation sets. Context window parity means that all three models can see the same amount of code. The difference is how effectively they reason about that information.

Max output tokens

Max output tokens defines the longest response the model can generate in a single completion. This matters when generating complete files, extensive documentation, or long explanations.

Opus 4.5 can generate up to 32,000 tokens in one response. Both Sonnet 4.5 and Haiku 4.5 support 64,000 tokens. This means Sonnet and Haiku can generate twice as much code or documentation in a single response, reducing the need to break large files into multiple requests.

Extended thinking

Extended thinking allows models to show their reasoning process before generating the final answer. The model works through the problem step-by-step, exploring different approaches and catching potential errors before committing to a solution.

All three models support extended thinking. When enabled, you see the model's internal reasoning, which helps debug why it chose a particular approach. The thinking process uses additional tokens billed at output rates, but it often prevents errors that would cost more time to fix later.

Relative speed

Relative speed measures how quickly the model generates responses. This affects both time-to-first-token (how long it takes to see the start of the response) and overall throughput (how quickly the complete response arrives).

Haiku 4.5 is the fastest, generating responses almost instantly. Sonnet 4.5 is fast, typically providing answers within seconds. Opus 4.5 is moderate, taking longer to produce responses but applying deeper analysis. Haiku runs 4-5 times faster than Sonnet at a fraction of the cost. Speed matters differently depending on workflow: for rapid iteration, Haiku maintains momentum; for complex analysis, Opus's thoroughness prevents downstream mistakes.

Model ID

Model ID is the exact identifier you use in API calls to specify which model processes your request. Using the full versioned identifier (rather than an alias) ensures consistent behavior across deployments.

Opus 4.5 uses claude-opus-4-5-20251101. Sonnet 4.5 uses claude-sonnet-4-5-20250929. Haiku 4.5 uses claude-haiku-4-5-20251001. The date suffix indicates the model snapshot version. Aliases like claude-opus-4-5 automatically point to the latest snapshot, but production applications should use the full versioned identifier to prevent unexpected behavior when new snapshots released.

When each model excels at coding

The Claude model selection depends on where complexity lives in your workflow.

Opus handles architectural decisions where missing an edge case costs hours of debugging, Sonnet balances reasoning depth with response speed for daily development work, and Haiku completes well-defined tasks when the solution space is clear and iteration speed matters more than exhaustive analysis.

Claude Haiku 4.5: The Workhorse

Best for: Code review, documentation, linting, test generation, sub-agent tasks

Claude Sonnet 4.5: The Balanced Default

Best for: Multi-file changes, debugging, architecture decisions, code migration

Official recommendation: "If you're unsure which model to use, we recommend starting with Claude Sonnet 4.5." — Anthropic Documentation

Claude Opus 4.5: The Specialist

Best for: Large-scale refactoring, multi-agent orchestration, novel problem-solving, high-stakes code

According to Claude Code documentation, the product itself uses intelligent model routing:

"In planning mode, automatically uses opus for complex reasoning. In execution mode, it automatically switches to Sonnet for code generation and implementation. This gives you the best of both worlds: Opus's superior reasoning for planning, and Sonnet's efficiency for execution."

Model selection decision framework

Choosing the right model requires evaluating task complexity, error cost, and iteration frequency rather than defaulting to the most capable or cheapest option.

Step 1: categorize by task complexity

Task complexity determines whether you need pattern completion or architectural reasoning, where the cost of using an underpowered model is measured in debugging time rather than API charges.

Step 2: Benchmark Against Your Codebase

SWE-bench uses Python repositories from major open-source projects. Your codebase may have different characteristics: proprietary frameworks, unusual patterns, domain-specific conventions. Test all three models on representative samples from your actual work.

Step 3: Measure Cost Per Successful Outcome

If Opus completes a task in one attempt that Sonnet requires three iterations to complete, the cost difference narrows. If Haiku handles 90% of your volume at 20% of Sonnet's cost, the savings compound regardless of the 10% requiring escalation.

Step 4: Build Routing, Not Defaults

The optimal architecture isn't "always use Sonnet" or "default to Opus." It's intelligent routing based on task characteristics, as demonstrated in the code examples above.

In a nutshell;

Use Haiku 4.5 for code review, documentation, linting, and high-volume tasks where speed matters more than marginal accuracy gains.

Use Sonnet 4.5 for multi-file changes, debugging, architecture decisions, and general-purpose coding work. This is Anthropic's official recommendation for most developers.

Use Opus 4.5 for large-scale refactoring, multi-agent orchestration, novel problem-solving, and high-stakes tasks where errors cost more than the 5x price premium.

The real question behind model selection

"Which Claude model is best for coding?" assumes a universal answer exists. It doesn't.

The question that matters is: what task complexity does your workflow actually require? Benchmark scores measure model capability ceilings. Production workflows operate well below those ceilings most of the time.

At DataAnnotation, our experts evaluate AI outputs daily: determining whether responses meet quality thresholds, identifying failure modes, and providing the human judgment that training data requires. This work demands a deep understanding of both AI capabilities and their limitations.

Coding projects on our platform involve evaluating AI-generated code across Python, JavaScript, C++, SQL, and other languages: assessing correctness, identifying bugs, and providing the expert signals that train next-generation models.

This isn't data labeling but more about contributing to the evaluation infrastructure that makes AI systems actually work.

DataAnnotation stays selective to maintain quality standards. You can only take the Starter Assessment once, so read the instructions carefully and review before submitting. There’s no signup fees.

Apply to DataAnnotation if you understand why expert judgment matters in AI development, and you have the technical depth to contribute.

Frequently Asked Questions

Which Claude model is best for coding beginners?

Claude Sonnet 4.5 is the best starting point. It offers the best balance of capability and cost, handles most coding tasks effectively, and is Anthropic's official recommendation for developers who are unsure which model to use.

Is Claude Opus 4.5 worth the extra cost for coding?

Only for specific use cases. Opus excels at large-scale refactoring, multi-agent orchestration, and high-stakes code where errors are expensive. For routine coding tasks, the 7.6% accuracy improvement over Haiku rarely justifies the 5x cost increase.

Can Claude Haiku 4.5 handle real coding tasks?

Yes. Haiku 4.5 scores 73.3% on SWE-bench Verified, which, according to Anthropic, "would have been state-of-the-art on internal benchmarks just six months ago." It achieves 90% of Sonnet's performance on agentic coding evaluations at one-third the cost.

What's the difference between Claude context windows for coding?

All three models support 200K tokens (approximately 150,000 words or 500 pages of code). Sonnet 4.5 additionally supports a 1M token context window in beta for organizations in usage tier 4.

What are the exact model IDs for Claude coding models?

.jpeg)