A well-known truth is that even exceptional engineers with stellar careers often fail FAANG interviews, despite years of shipping production code. This is because FAANG interviews exercise an entirely different muscle than day-to-day architectural work.

The stakes are high. Google's acceptance rate is estimated at between 0.2% and 0.5%, with roughly 20,000 hires from over 3 million annual applicants, while fewer than 5% of candidates who reach Meta's onsite walk away with an offer.

Closing that gap requires 3 to 6 months of focused preparation. You must code accurately while narrating your thoughts, navigate ambiguity in minutes, and keep edge cases top of mind — all under an unforgiving clock while someone watches every keystroke.

This guide breaks down what FAANG actually evaluates at senior levels and gives you concrete preparation steps that work for experienced engineers.

1. Understand what FAANG tests in coding rounds

Senior-level coding interviews at Google or Amazon operate with different expectations than junior screens. Interviewers assume basic competency — they're not testing whether candidates can implement binary search.

They're evaluating how quickly engineers can decompose ambiguous problems, explain approaches before coding, and produce production-ready solutions under time constraints.

The four dimensions that separate senior from junior performance

Here's what matters: Would your interviewer trust you to write production code unsupervised? Every decision, every silence, every variable name feeds that judgment.

The evaluation happens across four dimensions that distinguish senior from junior performance:

- Problem decomposition speed: How rapidly can candidates slice vague prompts into solvable chunks without getting lost in details?

- Communication clarity: Do they outline approach, edge cases, and trade-offs before touching the keyboard, or do they code silently for twenty minutes?

- Code quality under pressure: Would this code pass PR review with proper variable names, helper functions, and defensive edge case handling?

- Trade-off reasoning: Can they justify choosing O(n log n) over O(n) when memory is constrained, or explain shipping brute force first, then optimizing?

These dimensions matter because they predict how you'll perform in ambiguous production situations where nobody hands you requirements in LeetCode format.
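To make the trade-off dimension concrete, here is a minimal Python sketch using duplicate detection as a stand-in problem. The problem choice and both function names are assumptions picked for illustration, not something a specific interviewer asks. The first version spends memory to get O(n) time; the second accepts O(n log n) time to avoid building a second container.

```python
from typing import List


def has_duplicate_fast(values: List[int]) -> bool:
    """O(n) time, O(n) extra space: remember every value seen so far."""
    seen = set()
    for value in values:
        if value in seen:
            return True
        seen.add(value)
    return False


def has_duplicate_low_memory(values: List[int]) -> bool:
    """O(n log n) time without a separate set of seen values.

    Sorts the caller's list in place, so confirm that mutation is allowed.
    CPython's sort can still allocate temporary merge space; a heapsort
    would be needed for strictly O(1) extra memory.
    """
    values.sort()
    for index in range(1, len(values)):
        if values[index] == values[index - 1]:
            return True
    return False
```

Being able to say out loud which version you would ship, and under which constraint, is the signal this dimension is looking for.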

Interview format stays consistent across levels

The format stays consistent: each 45-minute round covers one to two medium-to-hard LeetCode-style problems in a shared editor. Staff-level rounds offer less hand-holding and skip warm-up questions.

You're expected to recognize patterns instantly and implement solutions that merge into the main branch without revision.

What production engineers often miss: years of context-rich work make you accustomed to "good enough" code that teammates understand because they know the system. FAANG strips that context away.

Your code must be self-documenting because the interviewer has zero system knowledge. This is why senior engineers with perfect track records fail — they've optimized for velocity over clarity, and interviews test the opposite.

2. Build your problem pattern recognition library

FAANG coding rounds test pattern recognition speed more than algorithm memorization. Senior engineers who master core patterns consistently outperform those who grind random problems because time pressure rewards instant recognition over exhaustive preparation.

Pattern recognition works like debugging production issues

Think about how you debug production issues. You don't analyze every possible failure mode — you recognize symptoms instantly because you've seen similar patterns hundreds of times. Memory leak? Check allocations. Slow query? Look at the indexes.

FAANG interviews work identically: pattern recognition eliminates the cognitive load of "what should I try?" and lets you focus on implementation details.

The core patterns that repeat across interviews

The patterns themselves are familiar:

- Two pointers and sliding windows for arrays

- Fast and slow pointers for cycle detection

- BFS and DFS for trees and graphs

- Dynamic programming in one and two dimensions

- Hash maps for average-case O(1) lookups, heaps for top-K problems

- Backtracking for combinations

The difference between knowing these patterns academically and deploying them under pressure is treating them as a connected toolkit rather than isolated tricks.
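As a rough illustration of two patterns from the list, the sketch below shows fast and slow pointers for cycle detection and a bounded min-heap for a top-K query. The `ListNode` class and the function names are hypothetical, chosen only for this example.

```python
import heapq
from typing import List, Optional


class ListNode:
    """Minimal singly linked list node (hypothetical helper for this sketch)."""

    def __init__(self, value: int) -> None:
        self.value = value
        self.next: Optional["ListNode"] = None


def has_cycle(head: Optional[ListNode]) -> bool:
    """Fast pointer moves two steps, slow pointer one; they meet only in a cycle."""
    slow = head
    fast = head
    while fast is not None and fast.next is not None:
        slow = slow.next
        fast = fast.next.next
        if slow is fast:
            return True
    return False


def top_k_largest(values: List[int], k: int) -> List[int]:
    """Keep a min-heap of size k: O(n log k) time, O(k) space."""
    if k <= 0:
        return []
    min_heap: List[int] = []
    for value in values:
        if len(min_heap) < k:
            heapq.heappush(min_heap, value)
        elif value > min_heap[0]:
            # Replace the smallest of the current top k with the larger value.
            heapq.heapreplace(min_heap, value)
    return sorted(min_heap, reverse=True)
```

Both functions handle the empty case without special branches, which is the kind of detail worth pointing out while you write them.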

Building instant recognition through clustered practice

You should group practice problems by pattern and solve five to seven variations consecutively. This clustering builds instant recognition when similar structures appear under interview pressure. Focus on medium difficulty — these dominate actual interviews far more than easy or hard problems.

After each practice session, document three elements:

- The pattern itself

- Common edge cases that trip people up

- Time-space complexity trade-offs

This documentation habit builds the same critical thinking and attention to detail that AI training work demands — the ability to systematically break down complex problems and articulate your reasoning.

Write down pattern triggers explicitly: "If the problem mentions finding pairs that sum to a target in a sorted array, try two pointers. If it requires all combinations, think backtracking."
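The two triggers above map to code roughly as follows. This is a sketch under assumptions: the two-pointer version assumes the input is already sorted, and both function names are made up for illustration.

```python
from typing import List, Optional, Tuple


def find_pair_with_sum(sorted_values: List[int], target: int) -> Optional[Tuple[int, int]]:
    """Two pointers on a sorted list: O(n) time, O(1) extra space."""
    left_index = 0
    right_index = len(sorted_values) - 1
    while left_index < right_index:
        pair_sum = sorted_values[left_index] + sorted_values[right_index]
        if pair_sum == target:
            return sorted_values[left_index], sorted_values[right_index]
        if pair_sum < target:
            left_index += 1   # need a larger sum
        else:
            right_index -= 1  # need a smaller sum
    return None


def all_combinations(items: List[int], size: int) -> List[List[int]]:
    """Backtracking: choose an item, recurse, then undo the choice."""
    combinations: List[List[int]] = []
    current: List[int] = []

    def extend(start_index: int) -> None:
        if len(current) == size:
            combinations.append(current.copy())
            return
        for index in range(start_index, len(items)):
            current.append(items[index])
            extend(index + 1)
            current.pop()

    extend(0)
    return combinations
```

If the array is not sorted, a hash map of seen values is usually the better first move for the pair-sum trigger, and that is worth writing into your notes as well.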

The common mistake is jumping from one random problem to another without consolidating learning. Scattered practice kills the instant recognition these interviews demand.

3. Practice communicating your thought process out loud

Silent coding kills otherwise perfect solutions at senior levels. Interviewers evaluate the reasoning process as carefully as they do the final answers because they're hiring for judgment, not just implementation ability.

Think about how this differs from production work.

When you write code at your job, you think internally, implement, and then explain decisions in PR descriptions after the fact. Interviews reverse this sequence: explain first, implement second, validate third.

This feels unnatural because production engineering rewards efficient execution over constant verbalization.

What interviewers listen for

Interviewers listen for specific signals demonstrating senior-level thinking:

- Straightforward high-level approach before coding: Can you articulate the plan in plain language before writing code?

- Verbal checkpoints for edge cases: Do you verbalize edge cases (null inputs, empty arrays, boundary conditions) without prompting?

- Explicit trade-off justification: Can you explain why you chose this data structure over the alternatives, or why O(n log n) is acceptable here?

- Transparent debugging process: When stuck, do you narrate your troubleshooting approach or go silent while scrambling?

The skill isn't running commentary on every line, which is annoying. It's purposeful checkpoints that demonstrate systematic thinking.

The five-step communication framework

Use this five-step framework to maintain purposeful communication throughout the session:

- Clarify the problem by restating it and confirming constraints

- Propose a high-level approach in plain language before writing code

- Compare alternatives and state the expected time-space complexity

- Code while narrating significant decisions (not every line, just key choices)

- Walk through test cases explaining expected results at each step

This framework ensures you hit the communication checkpoints interviewers expect without over-narrating every keystroke.

Training verbal fluency before interview day

Train this habit through recorded practice sessions that focus on communication gaps, mock interviews with harsh feedback, or by explaining LeetCode solutions to non-technical friends who catch unclear reasoning.

Staff-level communication sounds like this: "We need O(k) memory because the hash map stores each unique ID once. A brute-force double loop runs in O(n²), but a sliding window achieves O(n). Let me outline the window logic first, implement it, then test with empty input and maximum-size cases to confirm boundary handling."
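The quote does not name a specific problem, so the sketch below assumes one for illustration: finding the longest run of consecutive distinct IDs. It shows where the O(k) hash map and the O(n) sliding window from the narration would live in code. The function name is hypothetical.

```python
from typing import Dict, List


def longest_distinct_run(ids: List[str]) -> int:
    """Length of the longest contiguous run with no repeated ID.

    O(n) time; the map holds one entry per unique ID, so O(k) space.
    """
    last_seen_at: Dict[str, int] = {}
    window_start = 0
    longest = 0
    for index, current_id in enumerate(ids):
        previous_index = last_seen_at.get(current_id)
        # Shrink the window only if the repeat falls inside it.
        if previous_index is not None and previous_index >= window_start:
            window_start = previous_index + 1
        last_seen_at[current_id] = index
        longest = max(longest, index - window_start + 1)
    return longest
```

The empty-input case returns 0 without a special branch, which is exactly the boundary the narration promises to test.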

The gap between strong engineers and strong interviewees often comes down to comfort with verbalization. Production work conditions you to think deeply and speak concisely. Interviews demand the opposite: think aloud at every key decision so the interviewer can follow your reasoning even when you take wrong turns.

4. Master the first 5 minutes of every coding interview

The opening minutes set the tone for the entire interview. Senior candidates who dive straight into code without clarifying assumptions signal haste rather than mastery — a red flag that undermines otherwise solid technical performance.

FAANG interviewers use this window to evaluate whether candidates can navigate ambiguity, structure problems methodically, and communicate like trusted peers on high-stakes projects. The engineers who advance are those who demonstrate deliberate problem analysis before touching the keyboard.

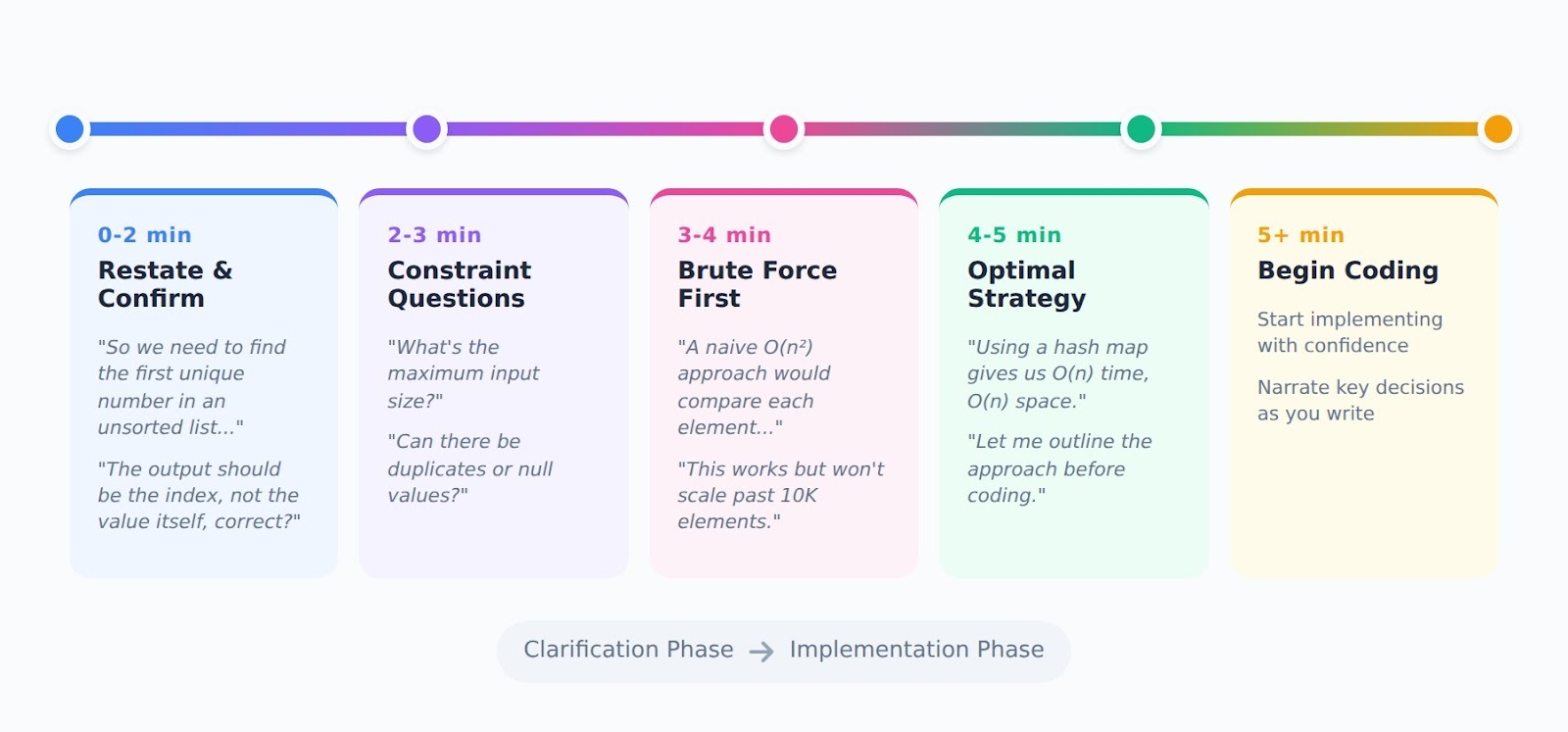

The minute-by-minute breakdown

A disciplined framework keeps focus during these critical opening moments:

- Minutes 1-2: Restate the problem in your own words, translating the interviewer's description into a clear input-output format and confirming your understanding

- Minutes 2-3: Ask pointed questions about constraints (maximum input size, duplicate handling, data types, whether arrays come presorted) to prevent wasted effort later

- Minutes 3-4: Outline the brute-force approach first, then quickly analyze its complexity to show you understand the problem before optimizing

- Minutes 4-5: Present the optimal strategy, sketching key data structures and noting expected complexity before touching the keyboard

This structure demonstrates systematic thinking while building shared understanding with your interviewer.

Questions that signal production thinking

Senior engineers should always ask these questions to demonstrate production thinking:

- What are the upper bounds on n and m? (Reveals whether O(n²) is acceptable or requires optimization)

- Can inputs contain duplicates or nulls? (Tests defensive programming mindset)

- Is in-place modification acceptable, or should original data remain untouched? (Shows API design awareness)

Effective openings sound like this: "Let me confirm my understanding. We receive an unsorted list of up to one million integers and need the first unique number. Counting occurrences in a hash map and then rescanning the list gives O(n) time and O(n) space; a naive double loop would be O(n²). Can we assume the list fits in memory and integers fit in 32 bits?"

This approach clarifies the scope, provides a baseline solution, and outlines an efficient path forward — all before writing code.
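For reference, the hash-map approach described in that opening could look like the sketch below. The function name and the choice to return `None` when no unique number exists are assumptions you would confirm with the interviewer.

```python
from collections import Counter
from typing import List, Optional


def first_unique(numbers: List[int]) -> Optional[int]:
    """Return the first value that appears exactly once, or None.

    Two linear passes: count occurrences, then rescan in input order.
    O(n) time and O(n) space.
    """
    occurrences = Counter(numbers)
    for number in numbers:
        if occurrences[number] == 1:
            return number
    return None
```

Note that the clarifying questions already shaped the code: the return type for the "no unique value" case and the in-memory assumption both came from the opening conversation.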

Why clarification feels wasteful but isn't

What makes this hard for experienced engineers: production work trains you to start coding quickly because context exists in Jira tickets, design docs, and team knowledge. The constraint-gathering mindset you develop here also applies to remote AI training work, where project instructions define boundaries but your judgment fills in the gaps.

You're accustomed to asking questions async in Slack, not blocking on complete requirements.

Interviews have no async — you get 45 minutes, and spending five minutes on clarification feels wasteful when the clock is ticking. But those five minutes determine whether you spend the next forty implementing the right solution or discovering halfway through that you misunderstood the constraints.

Practice this five-minute routine until it becomes automatic. Record yourself solving problems and watch how long you actually spend on clarification versus how long you think you spent. Most engineers discover they ask one or two questions, then dive into code, hoping they guessed constraints correctly.

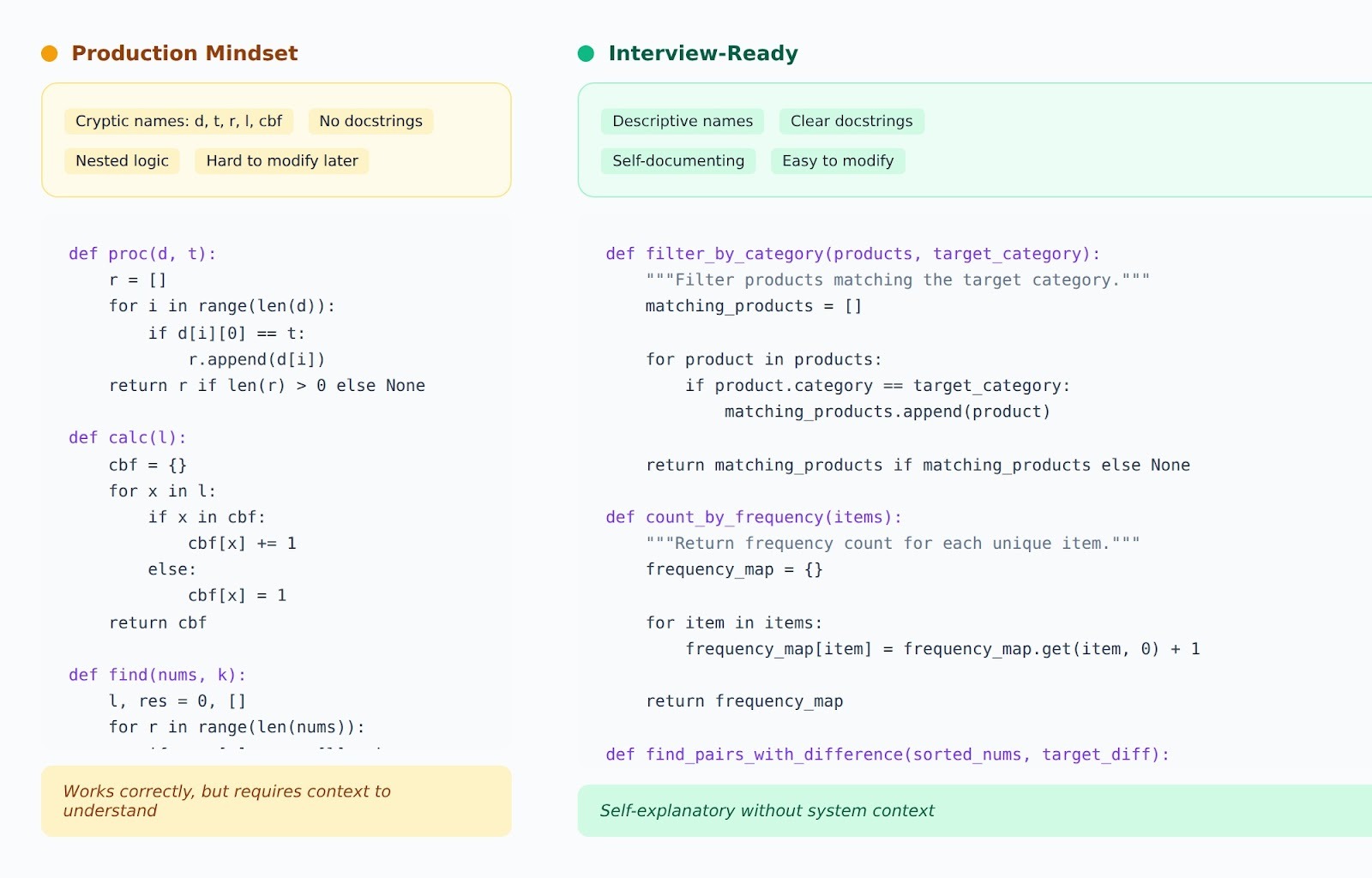

5. Write clean, production-quality code under time pressure

Senior-level interviewers assume algorithmic competency. What actually distinguishes candidates is whether their code would survive PR review — not just work, but be maintainable by others who lack the context you have in your head.

This is where production engineers often fail. You've spent years shipping features where "good enough" passes review because teammates understand the system. Variable names can be terse because everyone knows what ctx means. Helper functions feel like over-engineering for a minor feature.

FAANG interviews remove that shared context entirely. Your code must be self-documenting because the interviewer has zero system knowledge.

Quality signals interviewers watch for

Production-ready code in interviews requires specific attention to quality signals that feel excessive under time pressure but actually determine your level:

- Descriptive variable and function names: Use countByFrequency rather than cbf, and leftPointer rather than l (single letters are acceptable only for loop counters)

- Explicit edge case handling: Check for empty inputs, null pointers, integer overflow, and boundary conditions early

- Logical code organization: Extract repeated logic into helper functions instead of nesting everything in a hundred-line primary method

- Consistent style: Maintain proper indentation, bracing, and spacing that matches professional codebases

- Strategic commenting: Add comments only for non-obvious logic, avoiding line-by-line narration

These signals matter because they demonstrate you think about the engineers who'll maintain your code months after you ship it.

Time allocation under pressure

Time pressure demands deliberate budgeting. Allocate the first few minutes to clarifying constraints, a few more to discussing trade-offs, the bulk of the session to implementing the solution, and the remainder to testing and polishing.

This prevents getting stuck on perfect variable names while leaving no time for validation that catches obvious bugs.

Here's what separates senior performance from junior: juniors write correct code that works. Seniors write correct code that others can modify six months later without reverse-engineering intent.

For instance, when you write result.append(nums[i]) versus validPairs.append(leftValue), you're signaling whether you think about maintainability or just passing tests.
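Here is a small side-by-side sketch of that naming difference, written in Python's snake_case rather than the camelCase used above. Both functions are hypothetical and behave identically on a sorted list; only the readability differs.

```python
from typing import List, Tuple


def f(nums: List[int], t: int) -> List[Tuple[int, int]]:
    # Terse version: correct, but every name needs context to decode.
    r = []
    l, h = 0, len(nums) - 1
    while l < h:
        s = nums[l] + nums[h]
        if s == t:
            r.append((nums[l], nums[h]))
            l += 1
            h -= 1
        elif s < t:
            l += 1
        else:
            h -= 1
    return r


def find_pairs_with_sum(sorted_values: List[int], target_sum: int) -> List[Tuple[int, int]]:
    """Same algorithm, with names that explain intent without system context."""
    valid_pairs: List[Tuple[int, int]] = []
    left_index, right_index = 0, len(sorted_values) - 1
    while left_index < right_index:
        left_value = sorted_values[left_index]
        right_value = sorted_values[right_index]
        pair_sum = left_value + right_value
        if pair_sum == target_sum:
            valid_pairs.append((left_value, right_value))
            left_index += 1
            right_index -= 1
        elif pair_sum < target_sum:
            left_index += 1
        else:
            right_index -= 1
    return valid_pairs
```

The second version costs a few extra seconds of typing and saves the interviewer from asking what `r`, `l`, and `h` mean.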

Common mistakes that tank strong engineers

Here are common mistakes that tank otherwise strong engineers:

- Writing correct but unreadable code because "it's just a coding interview": this signals you don't think about teammates who read your PRs.

- Skipping edge case validation because "the problem didn't mention it": production code assumes inputs are hostile, not friendly.

- Nesting five levels deep instead of extracting helpers because "I'm saving time": code that takes a mental stack overflow to parse fails the maintainability test.
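One way to avoid the last two mistakes is to push validation into a small helper and use early exits instead of nested conditionals. The sketch below assumes a made-up task (summing positive quantities from untrusted string input); the function names are illustrative only.

```python
from typing import Iterable, Optional


def parse_positive_int(raw_value: Optional[str]) -> Optional[int]:
    """Return the parsed integer, or None when the input is missing or invalid."""
    if raw_value is None:
        return None
    try:
        parsed = int(raw_value)
    except ValueError:
        return None
    return parsed if parsed > 0 else None


def sum_valid_quantities(raw_values: Optional[Iterable[Optional[str]]]) -> int:
    """Sum every entry that parses to a positive integer; skip the rest."""
    if raw_values is None:
        return 0
    total = 0
    for raw_value in raw_values:
        quantity = parse_positive_int(raw_value)
        if quantity is None:
            continue  # hostile or malformed input is ignored, not trusted
        total += quantity
    return total
```

The main loop stays one level deep, and each edge case (missing list, missing entry, non-numeric text, non-positive number) has exactly one place where it is handled.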

During actual interviews, prove you can write code that ships to production. Not code that works in isolation, but code that junior engineers on your team could modify without asking what every variable represents. That's the senior bar.


6. Prepare for system design disguised as coding questions

Solving the algorithm perfectly only to hear "Great. Now make this work for a billion rows" isn't a random pivot — it's planned evaluation. Senior-level coding rounds deliberately blend into system design to test whether you think beyond algorithms to production reality.

These pivots evaluate understanding of distributed systems, concurrency, caching, and streaming — the skills that actually define staff engineers at companies like Google and Amazon.

Your LeetCode solution might be algorithmically optimal, but if you can't explain how it scales to production traffic, you're signaling mid-level thinking at a staff-level interview.

Common production-scale follow-ups

Common follow-up patterns that test production thinking include:

- "What if the input doesn't fit in memory?" Tests distributed systems understanding and data partitioning strategies

- "How would you make this thread-safe?" Evaluates concurrency awareness and knowledge of locks, atomics, or lock-free structures

- "Can it handle 100K queries per second without performance degradation?" Probes caching strategies, load balancing, and horizontal scaling knowledge

- "How would you modify this for streaming data instead of batch?" Tests understanding of real-time processing and stateful stream handling

These questions reveal whether you've actually scaled systems in production or just implemented features in someone else's architecture.
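Two of these follow-ups can be sketched briefly in code. The example below assumes a simple event-counting task; `ThreadSafeCounter` and `count_events` are hypothetical names. It uses a lock for thread safety and consumes its input lazily so the full stream never has to sit in memory.

```python
import threading
from collections import Counter
from typing import Iterable


class ThreadSafeCounter:
    """Counter guarded by a single lock; simple, but contended under heavy writes."""

    def __init__(self) -> None:
        self._counts: Counter = Counter()
        self._lock = threading.Lock()

    def increment(self, key: str, amount: int = 1) -> None:
        with self._lock:
            self._counts[key] += amount

    def get(self, key: str) -> int:
        with self._lock:
            return self._counts[key]  # Counter returns 0 for unseen keys


def count_events(event_stream: Iterable[str], counter: ThreadSafeCounter) -> None:
    """Consume events one at a time; works for generators and file handles."""
    for event in event_stream:
        counter.increment(event)
```

In an interview, the natural next sentence is the limitation: one global lock serializes writers, so at higher throughput you would discuss sharded counters or per-thread aggregation with periodic merges.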

Building the architectural mindset

Build the habit of extending every practice problem to production scale. After solving any problem, invest two minutes in considering memory footprints at a billion-record scale, latency requirements for user-facing requests, and failure modes and how to handle them gracefully. This mental muscle develops through repetition until architectural thinking becomes reflexive.
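The two-minute exercise is mostly arithmetic, and a tiny helper makes it concrete. The sketch below is a back-of-the-envelope estimate only: the record size and per-node capacity are assumptions you supply, and the function names are hypothetical.

```python
import math

BYTES_PER_GIB = 1024 ** 3


def estimate_memory_gib(record_count: int, bytes_per_record: int) -> float:
    """Raw payload size in GiB, ignoring container and index overhead."""
    return record_count * bytes_per_record / BYTES_PER_GIB


def shards_needed(total_gib: float, usable_gib_per_node: float) -> int:
    """Minimum number of nodes if data is spread evenly across shards."""
    if usable_gib_per_node <= 0:
        raise ValueError("usable_gib_per_node must be positive")
    return max(1, math.ceil(total_gib / usable_gib_per_node))
```

For example, one billion 8-byte integers come to roughly 7.45 GiB of raw payload, so with an assumed 4 GiB of usable memory per node you would need at least two shards before accounting for overhead or replication.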

Sketch quick architectures even when problems don't explicitly ask for them: for example, sharded storage for horizontal scaling, a write-through cache for read-heavy workloads, and idempotent queue workers for reliable processing.

Practice until pivoting from algorithm to architecture feels natural rather than jarring.
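To practice that pivot, it can help to sketch the building blocks in miniature. The in-memory example below illustrates an idempotent worker and a write-through cache; the class names are hypothetical, and a plain dict stands in for a real database or queue, which is a deliberate simplification.

```python
from typing import Callable, Dict, Optional, Set


class IdempotentWorker:
    """Processes each message ID at most once, so redelivery is harmless."""

    def __init__(self, handler: Callable[[dict], None]) -> None:
        self._handler = handler
        self._processed_ids: Set[str] = set()

    def process(self, message_id: str, payload: dict) -> bool:
        if message_id in self._processed_ids:
            return False  # duplicate delivery: skip the side effect
        self._handler(payload)
        self._processed_ids.add(message_id)
        return True


class WriteThroughCache:
    """Writes go to the backing store and the cache together; reads hit the cache first."""

    def __init__(self, backing_store: Dict[str, str]) -> None:
        self._store = backing_store
        self._cache: Dict[str, str] = {}

    def put(self, key: str, value: str) -> None:
        self._store[key] = value
        self._cache[key] = value

    def get(self, key: str) -> Optional[str]:
        if key in self._cache:
            return self._cache[key]
        value = self._store.get(key)
        if value is not None:
            self._cache[key] = value
        return value
```

A real system would persist the processed-ID set and bound the cache size; naming those gaps yourself is part of the architectural answer.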

What separates staff from senior responses

The transition should sound like: "For billion-row scale, I'd batch writes in chunks of 10K, stream through Kafka for durability, and process via parallel workers with checkpointing for failure recovery. Hot keys live in Redis for sub-millisecond reads, while cold data sits in Bigtable. We'd monitor lag via Prometheus and auto-scale consumers based on queue depth."

Compare that with responses that tank otherwise strong candidates:

- "I'd just increase the VM size" shows no understanding of horizontal scaling or distributed systems.

- "We'd buy more servers" ignores cost optimization and architectural efficiency.

- "Cache everything" reveals no consideration of invalidation, consistency, or cache-penetration attacks.

These answers signal you've never scaled systems in production, even if your algorithm is perfect.
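Returning to the stronger answer, the batching and checkpointing idea can be sketched without any infrastructure. The example below is a simplified, single-process illustration: `write_batch` is a hypothetical callback standing in for a durable sink, and the returned offset stands in for a persisted checkpoint.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator, List


def chunked(rows: Iterable[int], chunk_size: int) -> Iterator[List[int]]:
    """Yield successive lists of at most chunk_size rows without loading everything."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    iterator = iter(rows)
    while True:
        chunk = list(islice(iterator, chunk_size))
        if not chunk:
            return
        yield chunk


def process_with_checkpoint(
    rows: Iterable[int],
    chunk_size: int,
    write_batch: Callable[[List[int]], None],
    start_offset: int = 0,
) -> int:
    """Write rows in batches, resuming from start_offset; return the new offset."""
    remaining = islice(rows, start_offset, None)
    offset = start_offset
    for chunk in chunked(remaining, chunk_size):
        write_batch(chunk)
        offset += len(chunk)  # in a real system, persist this after each batch
    return offset
```

After a failure, calling the function again with the last saved offset skips the rows that were already written, which is the recovery behavior the checkpointing part of the answer describes.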

What makes this hard for experienced engineers: if you've spent years in a single codebase, you may have deep knowledge of one system's scaling patterns without a broad understanding of alternatives.

FAANG interviews test breadth. If your entire career has involved monolithic databases, you need a deliberate study of distributed patterns before staff-level interviews.

7. Maintain technical sharpness between interview rounds

Technical skills atrophy during extended job searches, and the atrophy hits hardest in areas that interviews test most: rapid problem decomposition, clean code under pressure, and articulating trade-offs without preparation time.

You need active practice that mirrors interview conditions rather than passive review of algorithms you already understand.

The atrophy problem for senior engineers

The specific challenge for senior engineers: production work doesn't require whiteboard coding or 45-minute problem sprints. You spend days on architectural decisions, hours reviewing PRs, minutes in meetings discussing trade-offs—but almost no time implementing unfamiliar algorithms while someone watches.

The skills interviews test are orthogonal to the skills that make you valuable in senior roles, which is why strong engineers often fail despite excellent track records.

How coding evaluation mirrors interview skills

Code evaluation work provides this specific training because it exercises identical muscles. When you assess AI-generated code, you practice the exact skills interviewers test.

At DataAnnotation, we pay $40+ per hour for coding projects, which validates you're practicing technical judgment rather than wasting time on irrelevant prep.

You control the schedule entirely: log in after dinner for an hour, work eight hours on Saturday, or maintain steady evening practice throughout your search.

You'll evaluate Python code for errors, fix AI-generated JSON files, and assess technical decisions with real consequences. You identify bugs, assess solution quality, and articulate why specific approaches succeed or fail. These are the same skills that system design rounds test.

Each project drops you into fresh evaluations, practicing the rapid context-switching you'll face when interviewers hand over design documents or broken services.

Contribute to AGI development at DataAnnotation

FAANG staff-level interviews require different strategies than earlier-career applications. The extended timeline creates a specific challenge: maintaining technical sharpness between interview rounds while managing your current role.

Coding evaluation work at DataAnnotation solves this problem. The fact that it pays $40+ per hour for coding projects validates that you're practicing technical judgment instead of wasting time on irrelevant prep.

Getting from interested to earning takes five straightforward steps:

- Visit the DataAnnotation application page and click "Apply"

- Fill out the brief form with your background and availability

- Complete the Starter Assessment, which tests your critical thinking and coding skills

- Check your inbox for the approval decision (typically within a few days)

- Log in to your dashboard, choose your first project, and start earning

No signup fees. DataAnnotation stays selective to maintain quality standards. You can only take the Starter Assessment once, so read the instructions carefully and review before submitting.

Start your application at DataAnnotation today and practice the rapid context-switching that FAANG interviews demand.
