Yes. DataAnnotation is a legitimate AI training platform that pays workers to help improve frontier AI models.

A few important notes upfront: There are DataAnnotation impersonators. To protect yourself, remember that we will never request payment from you — we pay you, not the other way around. Only apply through our official website, and verify you're on the correct domain before entering any information.

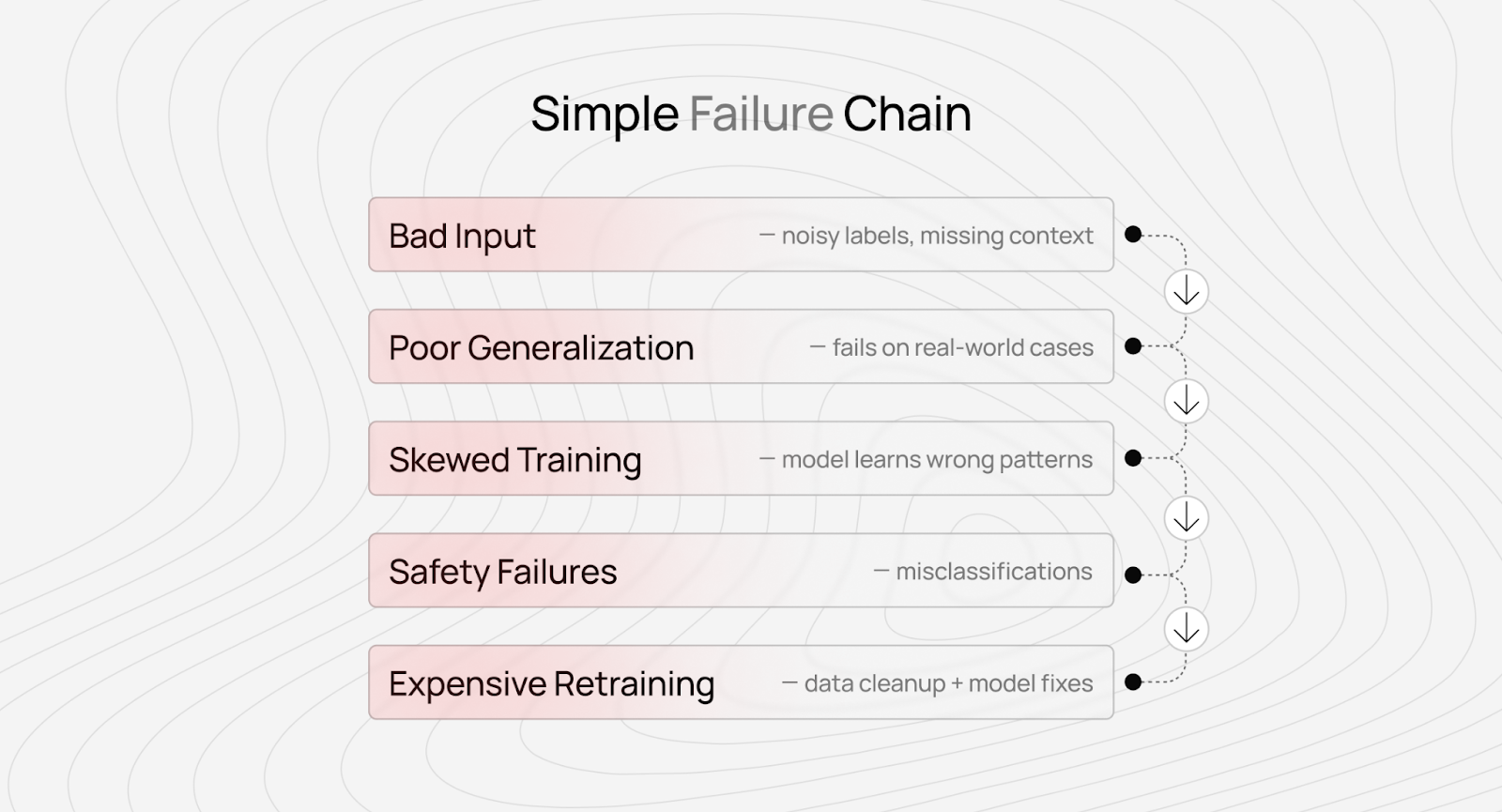

Most AI training platforms operate as "body shops": spreadsheets and recruiting pipelines with no way to measure whether the data improves or degrades models. They treat workers as interchangeable and pay them accordingly.

DataAnnotation exists because frontier AI development requires something different. The gap between "I can label images" and "I can teach models to reason" determines which platforms advance AI capabilities and which waste resources. We built technology that makes quality measurable—and we pay for expertise because that's what this work requires.

This article explains what AI training actually involves, why quality beats volume, and what we look for in contributors.

What is AI training, and how is the DataAnnotation approach different?

At DataAnnotation, we built something different: technology that makes quality measurable, qualification systems that validate expertise through demonstrated performance, and advanced standards that filter for workers who understand why this work matters for advancing AI.

AI training isn't mindless data entry. It's teaching models to think, reason, and create at levels that approach or exceed human capability.

The distinction matters because it determines everything: what the work requires, how quality gets measured, and whether platforms advance model capabilities or waste resources optimizing the wrong objectives.

When you ask someone to draw a bounding box around a car in an image, the quality ceiling is low — a second-grader and a Stanford PhD will produce nearly identical results.

However, when you ask someone to write poetry about moonlight, prove the Pythagorean theorem, or evaluate whether an AI's explanation of quantum mechanics contains subtle errors, the ceiling becomes unlimited.

AI training vs. data labeling

Most platforms were designed for the bounding box era: simple classification tasks where inter-annotator agreement serves as a proxy for quality. But as models advance beyond basic perception into reasoning, creativity, and domain expertise, that approach breaks.

You can't take a majority vote on which poem is better. You can't average preferences for mathematical proofs. Quality becomes subjective, contextual, and impossible to measure through simple agreement metrics.

This is where AI training diverges from data labeling: the work requires judgment that automation can't replicate and expertise that credentials alone don't guarantee.

For instance, a computational chemist with 10 years of industry experience might produce better training data than a chemistry PhD from an elite university who's never worked outside academia. An English literature PhD might write generic, uninspired poetry, while someone who never finished college produces work that Nobel laureates would admire.

The gap between platforms that advance frontier models and those that waste compute on benchmark hacking lies in how they handle this complexity.

Why frontier AI needs better data (and what it means for you)

Most AI training platforms don't have technology — they have spreadsheets and recruiting pipelines. They dump data into Google Sheets, hire anyone with a relevant degree, and pass workers to AI companies with no way to measure whether the resulting data improves models or degrades them.

This matters because volume without quality measurement wastes everyone's time. AI labs have spent months gathering 10 to 20 million synthetic training examples, only to discover 95% were redundant noise. Meanwhile, a thousand strategically curated examples from domain experts can outperform the entire synthetic dataset by capturing edge cases and subtle reasoning that automation can't generate.

The "body shop" approach treats humans as interchangeable units rather than sources of irreplaceable expertise. When platforms can't measure quality in real-time, they can't distinguish between top performers producing transformative insights and those producing noise. The result: data that teaches models to optimize for the wrong objectives—acing benchmarks by generating longer responses rather than improving reasoning.

For you, this means your actual expertise often goes unrecognized and unrewarded. The current system buries exceptional work in a sea of mediocrity, with no way to surface it.

What does quality AI training work require?

AI training work requires expertise that credentials can't proxy and judgment that automation can't replicate.

Consider what frontier model training demands:

- Evaluating whether an AI's explanation of a physics problem contains subtle conceptual errors

- Writing code that demonstrates elegant solutions to algorithmic challenges

- Creating poetry that exhibits genuine creativity rather than generic patterns

- Assessing whether a model's medical reasoning would lead to correct diagnoses in edge cases

These tasks require deep domain knowledge and critical thinking to spot what's missing in superficially correct answers.

Key requirement: expertise plus street smarts

We've found that some computer science PhDs produce mediocre code when asked to evaluate AI programming assistance — their training emphasized theory over craft, and many struggle with the practical engineering judgment that separates functional code from excellent code.

English literature PhDs often write stilted, overly academic prose when asked to create training data for creative writing models. Medical doctors with decades of clinical experience sometimes lack the systematic reasoning that makes their knowledge transferable to AI systems.

What actually predicts quality?

A combination of domain expertise, creativity, and what we call street smarts — the practical judgment that comes from applying knowledge in real-world contexts rather than purely academic settings.

From testimonials we’ve seen, here’s what’s possible:

- The computational chemist who spent 10 years optimizing pharmaceutical compounds has intuitions about molecular behavior that no amount of coursework can teach.

- The programmer who's debugged thousands of production issues can spot subtle errors that someone with a perfect GPA might miss.

- The writer who's published novels understands narrative structure in ways that literary analysis can't capture.

The work also requires mental fortitude to solve problems that take time to resolve correctly. AI training isn't piecework you complete during commercial breaks — it's deep intellectual engagement that demands sustained focus and genuine expertise.

Why your work at DataAnnotation matters: contributing to frontier AI development

This work matters because it advances the most crucial technology being built today — Artificial General Intelligence (AGI).

Every major AI lab building frontier models (the systems pushing toward AGI) relies on high-quality training data that only human expertise can provide.

When these companies launched new models that could reason about complex scientific problems, write sophisticated code, or engage in creative tasks that seemed impossible months earlier, that advancement came from training data that was measured, validated, and continuously improved.

We've had AI researchers tell us they couldn't have achieved their breakthroughs without the training data we provided. Our focus stays on work that requires the expertise, creativity, and critical thinking that separates advancing frontier models from optimizing benchmarks.

This mission-driven approach means workers aren't just completing tasks for hourly pay — they're contributing to systems that will eventually cure diseases, accelerate scientific discovery, and solve problems that human intelligence alone couldn't crack.

Who this work isn’t for

This work isn't for everyone — and that's intentional.

We maintain selective standards because quality at the frontier scale requires genuine expertise, not just effort. If you're exploring AI training work because you heard it's easy money that anyone can do, we’re afraid, this isn't the right platform.

If you're looking to maximize hourly volume through minimal-effort clicking, there are commodity platforms better suited to that approach. If credentials matter more to you than demonstrated capability, our qualification process can discourage you.

Who this work is for

Our quality AI training work is for:

Domain experts who want their expertise to matter: For instance, computational chemists who are tired of pharmaceutical roles where their knowledge gets underutilized. Mathematicians seeking intellectual engagement beyond teaching introductory calculus. Programmers who want to apply their craft to advancing AI rather than debugging legacy enterprise software.

Professionals who need flexible income without sacrificing intellectual standards: For example, the researcher awaiting grant funding who can contribute to frontier model training while maintaining their primary focus. The attorney with reduced hours who can apply legal reasoning to AI safety problems. The STEM professional who needs work without geographic constraints.

Creative professionals who understand craft: Examples include writers who can distinguish between generic AI prose and genuinely compelling narratives. Poets who recognize that technique without creativity produces mediocre work, regardless of formal training.

People who care about contributing to AGI development: Workers who understand that training frontier models matters more than optimizing their personal hourly rate. Experts who recognize that their knowledge becomes exponentially more valuable when transferred to AI systems that operate at scale.

The poetry you write teaches models about creativity and language. The code you evaluate helps them learn software engineering judgment. The scientific reasoning you demonstrate advances their capability to assist with research.

Qualification system

At DataAnnotation, we operate through a tiered qualification system that validates expertise and rewards demonstrated performance.

Entry starts with a Starter Assessment that typically takes about an hour to complete. This isn't a resume screen or a credential check — it's a performance-based evaluation that assesses whether you can do the work.

Pass it, and you enter a compensation structure that recognizes different levels of expertise:

- General projects: Starting at $20 per hour for evaluating chatbot responses, comparing AI outputs, and writing challenging prompts

- Multilingual projects: Starting at $20 per hour for translation and localization work across many languages

- Coding projects: Starting at $40 per hour for code evaluation and AI performance assessment across Python, JavaScript, HTML, C++, C#, SQL, and other languages

- STEM projects: Starting at $40 per hour for domain-specific work requiring bachelor's through PhD-level knowledge in mathematics, physics, biology, and chemistry

- Professional projects: Starting at $50 per hour for specialized work requiring credentials in law, finance, or medicine

Once qualified, you select projects from a dashboard showing available work that matches your expertise level. Project descriptions outline requirements, expected time commitment, and specific deliverables.

You can choose your work hours. You can work daily, weekly, or whenever projects fit your schedule. There are no minimum hour requirements, no mandatory login schedules, and no penalties for taking time away when other priorities demand attention.

The work here at DataAnnotation fits your life rather than controlling it.

Contribute to the AI training frontier at DataAnnotation

If our approach to AI training resonates with you, the application process is straightforward:

- Visit the DataAnnotation application page and click “Apply”

- Fill out the brief form with your background and availability

- Complete the Starter Assessment (about an hour)

- Check your inbox for the approval decision, which typically arrives in the next few days

- Log in to your dashboard, choose your first project, and start working

No signup fees. We stay selective to maintain quality standards. Just remember: you can only take the Starter Assessment once, so prepare thoroughly before starting.

Apply to DataAnnotation if you understand why quality beats volume in advancing frontier AI — and you have the expertise to contribute.